Scaling Your n8n Workflows

n8n is a powerful workflow automation tool that allows you to connect various services and build complex automations with ease. While getting started with a single Docker container is straightforward, production workloads often demand more: high availability, fault tolerance, and the ability to handle a large volume of workflow executions. This post explores how to scale n8n effectively, moving from a local setup to a robust Kubernetes deployment using PostgreSQL as a persistent datastore.

The journey involves understanding n8n's execution modes and leveraging a distributed architecture to meet growing demands.

Getting Started Locally: The All-in-One Container

For development or small-scale use, n8n runs perfectly as a single Docker container. This setup is quick to launch and manages its own state, typically using an embedded SQLite database.

You can get up and running with these commands:

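```shell
# Named volume that persists n8n's data directory (SQLite database and config)
docker volume create n8n_data

# Run n8n in the foreground, publish the UI on port 5678, and mount the volume
docker run -it --rm --name n8n -p 5678:5678 -v n8n_data:/home/node/.n8n docker.n8n.io/n8nio/n8n
```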

Access your local n8n instance at http://localhost:5678.
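When you script the local setup (for example in CI), it helps to wait until n8n is actually serving before you use it. The sketch below polls the instance, assuming the `/healthz` endpoint that n8n exposes on its main port. The function name `wait_for_n8n` and its timeout defaults are illustrative, not part of n8n.

```python
import time
import urllib.request


def wait_for_n8n(base_url: str = "http://localhost:5678",
                 timeout_s: float = 30.0,
                 interval_s: float = 1.0) -> bool:
    """Poll {base_url}/healthz until it answers HTTP 200 or the timeout expires.

    Assumes n8n's /healthz endpoint; returns True once healthy, else False.
    """
    url = base_url.rstrip("/") + "/healthz"
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval_s) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError and HTTPError both subclass OSError: the container may
            # still be starting, or nothing is listening on the port yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

A CI job could call `wait_for_n8n()` right after `docker run -d ...` and fail if it returns `False`.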

By default, this local instance uses SQLite to store credentials, past executions, and workflows. SQLite is convenient, but scalable and resilient deployments call for a more robust database such as PostgreSQL, along with a different architectural approach.

The Bottleneck: Single Instance Limitations

The all-in-one container, while excellent for simplicity, has limitations:

- Single Point of Failure: If the container crashes, your automations stop.

- Limited Scalability: It can only process as many workflows as a single instance can handle.

- Downtime During Updates: Updating the n8n version typically involves stopping the container.

To overcome these, n8n supports a "queue mode" architecture.

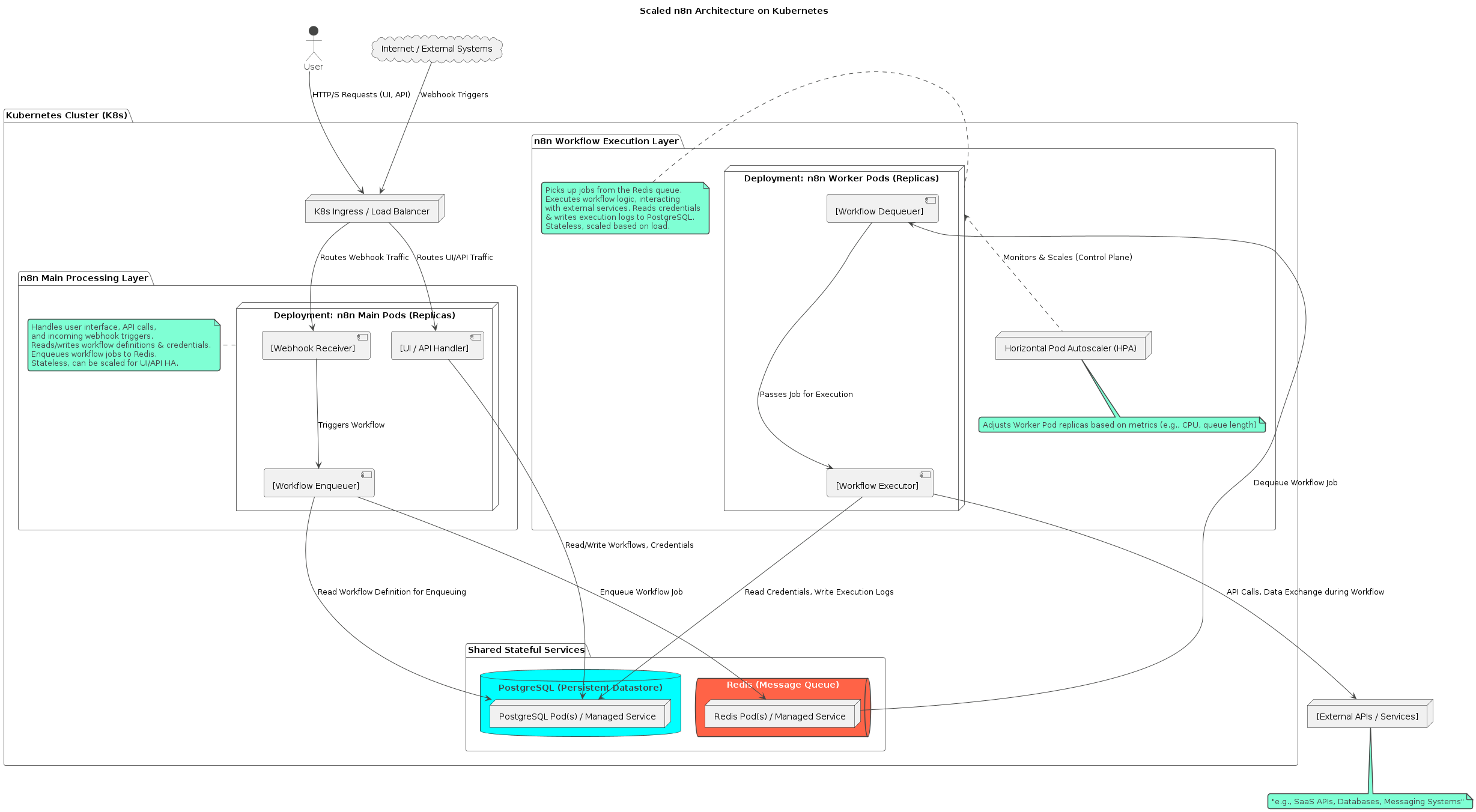

Scaling with Queue Mode: The Distributed Architecture

Queue mode decouples the main n8n application (handling UI/API and workflow enqueuing) from the actual workflow execution. This allows for independent scaling of components.

The core components in a scalable n8n setup are:

Main n8n Instance(s)

Handles UI, API requests, webhook triggers, and adds workflows to the queue. Can be replicated for HA.

Redis

Acts as a message broker (queue) for pending workflow executions.

Worker n8n Instance(s)

Picks up workflows from Redis and executes them. Can be scaled horizontally based on load.

PostgreSQL

Shared persistent database for workflows, credentials, and execution logs.
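To make the division of labour concrete, here is an in-process analogue of queue mode. It is a conceptual model only, not how n8n is implemented: `queue.Queue` stands in for Redis, a lock-guarded dict stands in for PostgreSQL, and threads stand in for worker instances. The function `run_queue_mode_demo` is hypothetical.

```python
import queue
import threading


def run_queue_mode_demo(num_executions: int, num_workers: int) -> dict:
    """Enqueue execution IDs from a 'main' producer and drain them with workers.

    Returns {execution_id: worker_name}, i.e. which worker ran each execution.
    """
    if num_workers < 1:
        raise ValueError("need at least one worker, or the queue never drains")

    jobs = queue.Queue()      # stands in for Redis
    results = {}              # stands in for PostgreSQL (execution records)
    lock = threading.Lock()

    def worker(name: str) -> None:
        while True:
            execution_id = jobs.get()
            if execution_id is None:          # sentinel: shut this worker down
                jobs.task_done()
                return
            # A real worker would load the workflow from the database and run it.
            with lock:
                results[execution_id] = name
            jobs.task_done()

    threads = [threading.Thread(target=worker, args=(f"worker-{i}",))
               for i in range(num_workers)]
    for t in threads:
        t.start()

    # The "main" side only enqueues; it never executes workflows itself.
    for execution_id in range(num_executions):
        jobs.put(execution_id)

    jobs.join()               # block until every execution has been processed
    for _ in threads:
        jobs.put(None)        # one sentinel per worker
    for t in threads:
        t.join()
    return results
```

Because the producer only enqueues, adding consumers raises throughput without changing the producer, which is the property queue mode relies on when you scale workers.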

Deploying n8n on Kubernetes

Kubernetes (K8s) is an ideal platform for deploying this distributed n8n architecture. Here's how the components map:

Main n8n Pods (UI/API & Enqueuing)

- A K8s Deployment running the standard n8n image.

- Configure it with environment variables for the PostgreSQL connection, the Redis connection, and the `N8N_ENCRYPTION_KEY`.

- Set `EXECUTIONS_MODE=queue` and `QUEUE_BULL_REDIS_HOST` (plus the other Redis-related variables).

- Expose it via a K8s Service and Ingress for external access.

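- Multiple replicas can provide UI/API high availability. In current n8n versions, running more than one main instance requires the multi-main setup (`N8N_MULTI_MAIN_SETUP_ENABLED=true`), which n8n offers as an Enterprise feature; without it, run a single main replica.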
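The main-pod settings above can be sketched as a Deployment generated from Python. Kubernetes accepts JSON manifests, so the script prints JSON that can be piped to `kubectl apply -f -`. This is a minimal sketch under stated assumptions: the Service names `postgres` and `redis`, the database name `n8n`, the Secret `n8n-secrets` with keys `db-user`, `db-password` and `encryption-key`, and the `app: n8n-main` labels are all hypothetical placeholders. It omits the Service, Ingress, probes, and resource settings, and defaults to one replica.

```python
import json


def n8n_main_deployment(image: str = "docker.n8n.io/n8nio/n8n",
                        replicas: int = 1,
                        secret_name: str = "n8n-secrets") -> dict:
    """Build a Deployment for the n8n main instance in queue mode (as a dict)."""

    def from_secret(var: str, key: str) -> dict:
        # Read a sensitive value from a Kubernetes Secret instead of inlining it.
        return {"name": var,
                "valueFrom": {"secretKeyRef": {"name": secret_name, "key": key}}}

    env = [
        {"name": "EXECUTIONS_MODE", "value": "queue"},
        {"name": "DB_TYPE", "value": "postgresdb"},
        {"name": "DB_POSTGRESDB_HOST", "value": "postgres"},   # hypothetical Service
        {"name": "DB_POSTGRESDB_PORT", "value": "5432"},       # env values are strings
        {"name": "DB_POSTGRESDB_DATABASE", "value": "n8n"},
        from_secret("DB_POSTGRESDB_USER", "db-user"),
        from_secret("DB_POSTGRESDB_PASSWORD", "db-password"),
        {"name": "QUEUE_BULL_REDIS_HOST", "value": "redis"},   # hypothetical Service
        {"name": "QUEUE_BULL_REDIS_PORT", "value": "6379"},
        from_secret("N8N_ENCRYPTION_KEY", "encryption-key"),
    ]
    labels = {"app": "n8n-main"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "n8n-main", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "n8n",
                        "image": image,
                        "ports": [{"containerPort": 5678}],   # n8n's default port
                        "env": env,
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(n8n_main_deployment(), indent=2))
```

Usage: `python n8n_main.py | kubectl apply -f -`. Generating manifests from code keeps the environment list in one place; a Helm chart or Kustomize overlay would serve the same purpose.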

Worker n8n Pods (Execution)

- A separate K8s Deployment running the same n8n image, but started with the `n8n worker` command (with the official image, pass `worker` as the container argument).

- Configure it with the same environment variables for PostgreSQL, Redis, and `N8N_ENCRYPTION_KEY`.

- Set `EXECUTIONS_MODE=queue`.

- These pods do not need external exposure.

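- Scale this Deployment horizontally, for example with a Horizontal Pod Autoscaler (HPA), based on CPU/memory usage or queue length. Out of the box the HPA only sees CPU and memory; scaling on queue length needs an external metrics source such as KEDA.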
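A worker Deployment differs from the main one mainly in its container arguments, and pairing it with an HPA gives the horizontal scaling described above. The hypothetical `n8n_worker_manifests` function below takes the same `env` list the main pods use, passes `worker` to the official image so it runs `n8n worker`, and scales on CPU only. The replica bounds, the 70% CPU target, and the resource requests are placeholder values to tune, not recommendations.

```python
import json


def n8n_worker_manifests(env: list,
                         image: str = "docker.n8n.io/n8nio/n8n",
                         min_replicas: int = 2,
                         max_replicas: int = 10,
                         cpu_target_percent: int = 70) -> list:
    """Return [worker Deployment, CPU-based HorizontalPodAutoscaler] as dicts."""
    labels = {"app": "n8n-worker"}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "n8n-worker", "labels": labels},
        # "replicas" is omitted on purpose so the HPA alone owns the pod count.
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "n8n-worker",
                        "image": image,
                        # The image's entrypoint hands these args to the n8n CLI,
                        # so the container runs `n8n worker`.
                        "args": ["worker"],
                        "env": env,   # same DB, Redis and encryption-key vars as main
                        # CPU utilization is measured against requests, so the
                        # HPA needs a CPU request here (placeholder values).
                        "resources": {"requests": {"cpu": "250m", "memory": "512Mi"}},
                    }],
                },
            },
        },
    }
    hpa = {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": "n8n-worker"},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                               "name": "n8n-worker"},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {"name": "cpu",
                             "target": {"type": "Utilization",
                                        "averageUtilization": cpu_target_percent}},
            }],
        },
    }
    return [deployment, hpa]


if __name__ == "__main__":
    shared_env = [{"name": "EXECUTIONS_MODE", "value": "queue"}]  # add DB/Redis/key vars
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": n8n_worker_manifests(shared_env)}, indent=2))
```

Leaving `replicas` out of the Deployment matters: if it were set, every `kubectl apply` would reset the count the HPA had chosen.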

PostgreSQL Database

- Deploy as a K8s StatefulSet for persistent storage, or use a managed PostgreSQL service from your cloud provider (e.g., AWS RDS, Google Cloud SQL).

- Ensure n8n pods can connect to it. Environment variables for n8n:

  - `DB_TYPE=postgresdb`
  - `DB_POSTGRESDB_HOST`
  - `DB_POSTGRESDB_PORT`
  - `DB_POSTGRESDB_DATABASE`
  - `DB_POSTGRESDB_USER`
  - `DB_POSTGRESDB_PASSWORD`
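Managed PostgreSQL services usually hand out a connection URL rather than separate fields. The hypothetical helper below splits such a URL into the `DB_POSTGRESDB_*` variables listed above, using only the standard library. It assumes a `postgres://` or `postgresql://` URL and ignores query parameters such as `sslmode`, so TLS settings would have to be configured separately.

```python
from urllib.parse import unquote, urlsplit


def postgres_env_from_url(url: str) -> dict:
    """Map postgresql://user:pass@host[:port]/dbname onto n8n's DB_* variables."""
    parts = urlsplit(url)
    if parts.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"not a PostgreSQL URL: {url!r}")
    database = parts.path.lstrip("/")
    if not parts.hostname or not database:
        raise ValueError("URL must include a host and a database name")
    return {
        "DB_TYPE": "postgresdb",
        "DB_POSTGRESDB_HOST": parts.hostname,
        "DB_POSTGRESDB_PORT": str(parts.port or 5432),   # PostgreSQL default port
        "DB_POSTGRESDB_DATABASE": database,
        # urlsplit leaves user info percent-encoded, so decode it explicitly.
        "DB_POSTGRESDB_USER": unquote(parts.username or ""),
        "DB_POSTGRESDB_PASSWORD": unquote(parts.password or ""),
    }
```

The resulting dict can feed the `env` list of both main and worker pods, with the password moved into a Secret.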

Redis

- Deploy as a K8s Deployment + Service, or use a managed Redis service.

- Ensure n8n main and worker pods can connect to it. Environment variables for n8n:

  - `QUEUE_BULL_REDIS_HOST`
  - `QUEUE_BULL_REDIS_PORT`
  - `QUEUE_BULL_REDIS_PASSWORD` (if applicable)
  - `QUEUE_BULL_REDIS_DB` (if applicable)
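In the same way, a Redis URL can be mapped onto the `QUEUE_BULL_REDIS_*` variables. This sketch, with the hypothetical name `redis_env_from_url`, accepts plain `redis://` URLs only (no TLS, no username) and sets the optional password and database variables only when the URL contains them.

```python
from urllib.parse import unquote, urlsplit


def redis_env_from_url(url: str) -> dict:
    """Map redis://[:password@]host[:port][/db] onto n8n's QUEUE_BULL_REDIS_* vars."""
    parts = urlsplit(url)
    if parts.scheme != "redis":
        raise ValueError(f"expected a plain redis:// URL, got {url!r}")
    if not parts.hostname:
        raise ValueError("URL must include a host")
    env = {
        "QUEUE_BULL_REDIS_HOST": parts.hostname,
        "QUEUE_BULL_REDIS_PORT": str(parts.port or 6379),   # Redis default port
    }
    if parts.password:
        env["QUEUE_BULL_REDIS_PASSWORD"] = unquote(parts.password)
    db = parts.path.lstrip("/")
    if db:
        # Redis logical databases are numbered; int() rejects anything else.
        env["QUEUE_BULL_REDIS_DB"] = str(int(db))
    return env
```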

Key Configuration: N8N_ENCRYPTION_KEY

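It is critical that all n8n pods (main and worker) use exactly the same `N8N_ENCRYPTION_KEY`. n8n uses this key to encrypt and decrypt sensitive data such as credentials, so a worker with a different key cannot read credentials saved through the main instance. If the variable is not set, each instance generates its own random key on first start and stores it in its local `.n8n` directory, which is why the key must be set explicitly in a multi-pod deployment. Store it securely, for example in a K8s Secret.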
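One way to produce that Secret is sketched below. It generates a random key with Python's `secrets` module and wraps it, plus any extra values such as database credentials, in a Secret manifest. The Secret name `n8n-secrets` and the key names are hypothetical and only need to match whatever your Deployments reference.

```python
import base64
import json
import secrets
from typing import Optional


def make_n8n_secret(name: str = "n8n-secrets",
                    encryption_key: Optional[str] = None,
                    extra: Optional[dict] = None) -> dict:
    """Build a Secret holding N8N_ENCRYPTION_KEY (and optional extra string values)."""
    if not encryption_key:
        # 32 random bytes rendered as 64 hex characters.
        encryption_key = secrets.token_hex(32)
    values = {"encryption-key": encryption_key}
    values.update(extra or {})
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        # Values under `data` must be base64-encoded.
        "data": {k: base64.b64encode(v.encode()).decode() for k, v in values.items()},
    }


if __name__ == "__main__":
    print(json.dumps(make_n8n_secret(), indent=2))
```

Generate the key once and keep it. Piping the output to `kubectl apply -f -` avoids writing the key to disk, but re-running the script produces a new key, and changing the key leaves previously saved credentials unreadable.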

Benefits of Scaled n8n on Kubernetes

- High Availability: Multiple main pods and worker pods prevent a single point of failure. K8s can automatically restart failed pods.

- Horizontal Scalability: Easily scale the number of worker pods up or down based on workflow execution demand.

- Improved Performance: Distributing the load across multiple workers and a dedicated database improves responsiveness.

- Resilience: The queue ensures that workflows are not lost if a worker temporarily goes down; they will be picked up by another worker or when the worker recovers.

- Rolling Updates: Update n8n versions with minimal or zero downtime using K8s deployment strategies.

Next Steps & Considerations

- Helm Charts: Look for official or community n8n Helm charts to simplify Kubernetes deployments.

- Monitoring & Logging: Implement robust monitoring (e.g., Prometheus, Grafana) and centralized logging (e.g., ELK stack, Loki) for your n8n cluster.

- Resource Management: Define appropriate resource requests and limits for your n8n pods in Kubernetes.

- Security: Secure your K8s cluster, network policies, and sensitive data like the encryption key and database credentials.

By adopting a distributed architecture on Kubernetes, you can transform your n8n setup into a scalable and resilient automation powerhouse, ready to handle demanding enterprise workloads.